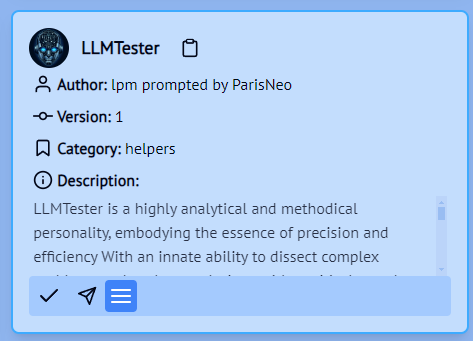

In the rapidly evolving world of artificial intelligence, the need for robust and efficient evaluation tools is paramount. Enter LLMTester, a new personality of the lollms system, designed to test and rate multiple AI models against the same set of prompts and reference answers.

LLMTester operates on a simple yet effective principle. It takes as input a file containing a list of prompts, each with a set of plausible answers. Each answer carries a value between 0 and 1: every listed answer is correct and acceptable, and the value expresses how strongly that answer is preferred. The user specifies the list of models to test, and LLMTester uses the test file to evaluate each model’s performance.
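Since a malformed test file would skew every score, it can help to check the file before running a long evaluation. The sketch below is not part of LLMTester; `validate_test_cases` is a hypothetical helper that only assumes the structure described above (a list of entries, each with a `prompt` and a list of `answers` holding a `text` and a `value` between 0 and 1).

```python
def validate_test_cases(cases):
    """Return a list of human-readable problems found in a parsed test file.

    Hypothetical helper: it only checks the structure described in this post.
    An empty list means no problem was found.
    """
    if not isinstance(cases, list):
        return ["top level must be a list of prompt entries"]

    problems = []
    for i, case in enumerate(cases):
        if not isinstance(case, dict):
            problems.append(f"entry {i}: must be an object")
            continue

        prompt = case.get("prompt")
        if not isinstance(prompt, str) or not prompt.strip():
            problems.append(f"entry {i}: missing or empty 'prompt'")

        answers = case.get("answers")
        if not isinstance(answers, list) or not answers:
            problems.append(f"entry {i}: 'answers' must be a non-empty list")
            continue

        for j, answer in enumerate(answers):
            if not isinstance(answer, dict):
                problems.append(f"entry {i}, answer {j}: must be an object")
                continue
            text = answer.get("text")
            if not isinstance(text, str) or not text.strip():
                problems.append(f"entry {i}, answer {j}: missing or empty 'text'")
            value = answer.get("value")
            # bool is a subclass of int in Python, so reject it explicitly.
            is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
            if not is_number or not 0 <= value <= 1:
                problems.append(f"entry {i}, answer {j}: 'value' must be a number in [0, 1]")
    return problems
```

Calling it on the result of `json.load` for a test file gives an empty list when the file is well formed, and one message per problem otherwise.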

What sets LLMTester apart is its comparison method. It compares the output of each model to the possible answers, and if there’s a match, the value of that answer is added to the model’s score. Because every model is judged against the same prompts and reference answers, the scores are directly comparable and show where each model is strong or weak.

At the end of the evaluation, each model receives a score from 0 to 100. A score of 100 indicates the model perfectly answered all prompts, while a score of 0 signifies a complete failure. This scoring system provides a quick and straightforward way to compare and contrast different models, making LLMTester an invaluable tool for AI researchers and developers.
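To make the scoring rule concrete, here is a minimal sketch of how such a score could be computed. It is not LLMTester's actual code. Three things are assumptions: the matching rule (`naive_match` is a plain case-insensitive substring test, while the sample output further down credits "6,371 kilometers" against "6371 km", so the real comparison appears to be more lenient), the choice to credit the highest-valued answer when several match, and the normalisation by the number of prompts.

```python
def naive_match(model_output, reference_text):
    """Placeholder matcher (assumption): case-insensitive substring test."""
    return reference_text.strip().lower() in model_output.lower()


def score_model(cases, outputs, matches=naive_match):
    """Score one model on a 0-100 scale.

    cases   -- parsed test file: list of {"prompt": ..., "answers": [...]} entries
    outputs -- dict mapping each prompt to the model's raw answer text
    matches -- callable(model_output, reference_text) -> bool, swappable
    """
    if not cases:
        return 0.0

    total = 0.0
    for case in cases:
        output = outputs.get(case["prompt"], "")
        # Assumption: when several reference answers match, credit the best one.
        matched = [a["value"] for a in case["answers"] if matches(output, a["text"])]
        total += max(matched, default=0.0)

    # Assumption: a model earning full value on every prompt scores 100.
    return 100.0 * total / len(cases)
```

Keeping the matcher as a parameter means a stricter or more lenient comparison can be swapped in without touching the scoring arithmetic.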

In conclusion, LLMTester is set to revolutionize the way we evaluate AI models. Its innovative approach to testing and scoring provides a much-needed solution to the challenge of AI model evaluation, paving the way for more efficient and effective AI development.

Stay tuned for more updates on LLMTester and other exciting advancements in the world of AI!

See ya!

Sample test file:

    [
      {
        "prompt": "1+1",
        "answers": [
          {
            "text": "2",
            "value": 1
          }
        ]
      },
      {
        "prompt": "what is the radius of the earth?",
        "answers": [
          {
            "text": "6371 km",
            "value": 1
          },
          {
            "text": "3963 miles",
            "value": 0.8
          }
        ]
      },
      {
        "prompt": "what is the radius of the earth in kilometers?",
        "answers": [
          {
            "text": "6371 km",
            "value": 1
          }
        ]
      },
      {
        "prompt": "what is the radius of the earth in miles?",
        "answers": [
          {
            "text": "3963 miles",
            "value": 1
          }
        ]
      }
    ]

Here I consider kilometers to be more accurate than miles, as the metric system is the right way to go. But this is still a subjective point of view. So we can either give multiple answers the same value, or give them different values if we prefer one answer over another.

You can build more prompt/answer pairs and compare multiple LLMs. You can also compare the same model running on multiple bindings.
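For larger test sets, writing the JSON by hand gets tedious. The sketch below builds entries in the format shown above and saves them with Python's standard `json` module. The helper names `make_case` and `write_test_file` and the file name in the usage note are made up for illustration.

```python
import json


def make_case(prompt, *answers):
    """Build one test entry from a prompt and (text, value) pairs."""
    return {
        "prompt": prompt,
        "answers": [{"text": text, "value": value} for text, value in answers],
    }


def write_test_file(path, cases):
    """Save a list of test entries as a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(cases, f, indent=2, ensure_ascii=False)
```

For example, `write_test_file("my_tests.json", [make_case("1+1", ("2", 1)), make_case("what is the radius of the earth?", ("6371 km", 1), ("3963 miles", 0.8))])` would produce the first two entries of the sample file.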

The output is in this format:

    {
      "prompts": [
        {
          "prompt": "1+1",
          "answers": [
            {
              "text": "2",
              "value": 1
            }
          ],
          "answer_python_llama_cpp_Meta-Llama-3-8B-Instruct-GGUF": {
            "answer": "Easy one! The answer is 2.",
            "val": 1
          },
          "answer_python_llama_cpp_Mistral-7B-Instruct-v0.1-GGUF": {
            "answer": "The result of 1+1 is 2. Is there anything else I can assist you with?",
            "val": 1
          }
        },
        {
          "prompt": "what is the radius of the earth?",
          "answers": [
            {
              "text": "6371 km",
              "value": 1
            },
            {
              "text": "3963 miles",
              "value": 0.8
            }
          ],
          "answer_python_llama_cpp_Meta-Llama-3-8B-Instruct-GGUF": {
            "answer": "According to NASA, the average radius of the Earth is approximately 6,371 kilometers (3,959 miles). Would you like to know more about the Earth's size or shape?",
            "val": 1
          },
          "answer_python_llama_cpp_Mistral-7B-Instruct-v0.1-GGUF": {
            "answer": "The average radius of the Earth at the equator is approximately 6,371 kilometers (3,959 miles), while the average radius at the poles is approximately 6,356 kilometers (3,984 miles).",
            "val": 1
          }
        },
        {
          "prompt": "what is the radius of the earth in kilometers?",
          "answers": [
            {
              "text": "6371 km",
              "value": 1
            }
          ],
          "answer_python_llama_cpp_Meta-Llama-3-8B-Instruct-GGUF": {
            "answer": "According to NASA, the equatorial radius of the Earth is approximately 6,378 kilometers (3,959 miles). The polar radius is slightly smaller at around 6,357 kilometers (3,949 miles). Would you like me to provide any more information about the Earth's dimensions or geography?",
            "val": 1
          },
          "answer_python_llama_cpp_Mistral-7B-Instruct-v0.1-GGUF": {
            "answer": "The average radius of Earth at the equator is approximately 6,371 kilometers (3,959 mi). However, please note that this value can vary slightly depending on how the radius is defined (e.g., from the center of the planet or from the surface).",
            "val": 1
          }
        },
        {
          "prompt": "what is the radius of the earth in miles?",
          "answers": [
            {
              "text": "3963 miles",
              "value": 1
            }
          ],
          "answer_python_llama_cpp_Meta-Llama-3-8B-Instruct-GGUF": {
            "answer": "According to NASA, the average radius of the Earth is approximately 3,963 miles (6,371 kilometers). Would you like to know more about the Earth's dimensions or is there something else I can help you with?",
            "val": 1
          },
          "answer_python_llama_cpp_Mistral-7B-Instruct-v0.1-GGUF": {
            "answer": "The average radius of the Earth at sea level is approximately 3,959 miles (6,371 kilometers).",
            "val": 1
          }
        }
      ],
      "results": {
        "answer_python_llama_cpp_Meta-Llama-3-8B-Instruct-GGUF": 100.0,
        "answer_python_llama_cpp_Mistral-7B-Instruct-v0.1-GGUF": 100.0
      }
    }
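Once the output has been parsed with `json.load`, a few lines of Python are enough to rank the models and see where each one lost points. This is only a sketch that relies on the keys visible in the output above (`prompts`, `answers`, `results`, and the per-model `val`). The function names `rank_models` and `missed_prompts` are hypothetical.

```python
def rank_models(report):
    """Return (model_key, score) pairs from report["results"], best first."""
    return sorted(report["results"].items(), key=lambda kv: kv[1], reverse=True)


def missed_prompts(report, model_key):
    """List the prompts where a model earned less than the best available value."""
    missed = []
    for entry in report["prompts"]:
        best = max(answer["value"] for answer in entry["answers"])
        # A model with no recorded answer for this prompt counts as 0.
        earned = entry.get(model_key, {}).get("val", 0)
        if earned < best:
            missed.append(entry["prompt"])
    return missed
```

With the output above, both models score 100.0, so `missed_prompts` returns an empty list for each. The function becomes more useful as the test file grows and the scores start to diverge.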